Collective vs. Artificial Intelligence: What We Learned by Sending Dogs to Court

Federico Ast examines the limits of AI in the future of the legal industry and the role blockchain-based dispute resolution may play in it.

Is there a future for the legal profession? Will lawyers be replaced by artificial intelligence tools that can do legal work faster and at a fraction of the cost?

Early attempts to use artificial intelligence in law date back to the 1960s, when Hugh Lawford, a professor at Queen's University in Kingston, Ontario, developed the QUIC/LAW database.

But the real explosion of AI only came in recent years, as the digital economy enabled us to build larger datasets to train algorithms. A number of corporate projects and startups are making extraordinary progress in the use of machine learning in the legal industry.

Some highlights:

- JPMorgan's COIN (short for Contract Intelligence) is a program that reviews commercial loan agreements in seconds, a task that used to consume 360,000 hours of work by lawyers and loan officers each year. The speed-up has significantly cut the cost of the bank's lending due diligence.

- In February 2018, the Israeli company LawGeex organized a challenge that pitted its AI algorithm against 20 US lawyers at spotting problematic clauses in five confidentiality agreements. The lawyers averaged 85% accuracy; the AI reached 94%. The lawyers took 92 minutes on average to review the contracts; the AI did it in 26 seconds.

- A number of startups are developing machine learning technologies to predict the result of trials and optimize litigation strategies. Some of the leaders in the field of legal analytics include Luminance, Premonition and Lex Machina.
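To put the LawGeex figures in perspective, the speed difference can be worked out directly from the reported averages (92 minutes for the lawyers, 26 seconds for the AI):

```latex
\frac{92\,\text{min} \times 60\,\text{s/min}}{26\,\text{s}} = \frac{5520\,\text{s}}{26\,\text{s}} \approx 212
```

In other words, the AI was about 212 times faster, while also scoring 9 percentage points higher on accuracy (94% vs. 85%).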

Much of a lawyer's work revolves around pattern recognition: determining how a given situation fits a pattern described by the law. Since AI also excels at pattern recognition, and does it much faster and more cheaply than humans, some believe it will put lawyers out of work.

The experience we've had at Kleros with our Doges on Trial pilot and our Token Curated List Dapp may give us some insight into the need for human involvement in dispute resolution.

Is This Clearly a Doge?

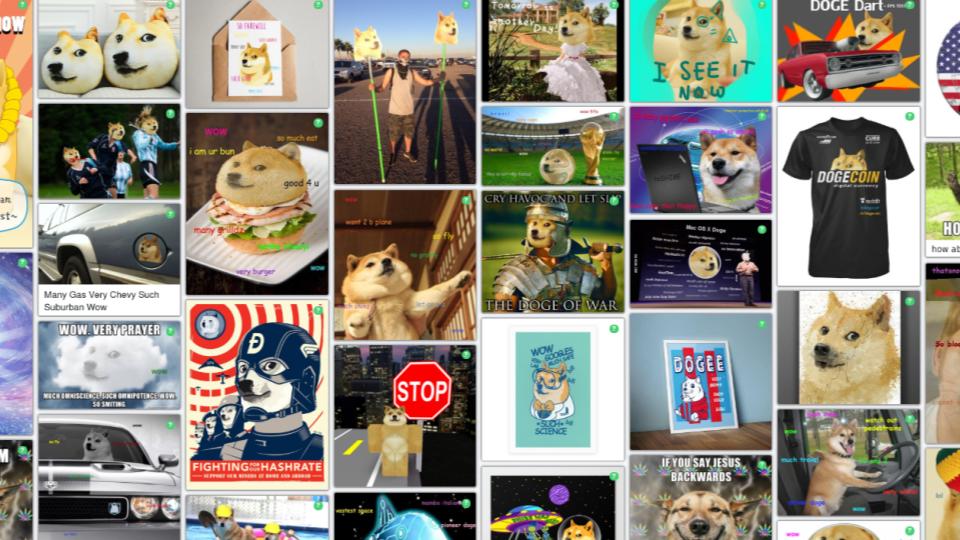

Our Doges on Trial pilot rewarded users who added non-repeated images of the Doge meme to a list. Jurors decided whether each image should be accepted. Here is a sample of the submitted pictures.

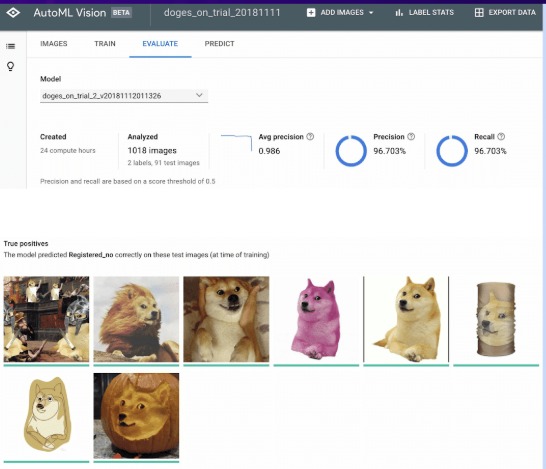

Image recognition technology has made huge progress in recent years, and much of the decision about whether to accept an image could be automated.
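The requirement that images not be repeated is the most mechanical part of the acceptance rule, and a good example of what can be automated. The following is a minimal Python sketch, assuming submissions arrive as raw file bytes; the `DogeList` class and `fingerprint` helper are hypothetical and are not how the Kleros pilot was actually implemented. It rejects exact duplicates by comparing SHA-256 digests.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a SHA-256 hex digest identifying the exact file contents."""
    return hashlib.sha256(image_bytes).hexdigest()


class DogeList:
    """Hypothetical in-memory list that only accepts byte-for-byte new images."""

    def __init__(self) -> None:
        self._seen: set[str] = set()  # digests of every accepted image

    def submit(self, image_bytes: bytes) -> bool:
        """Accept the image unless an identical file was accepted before."""
        digest = fingerprint(image_bytes)
        if digest in self._seen:
            return False  # exact duplicate: rejected without a juror
        self._seen.add(digest)
        return True


doges = DogeList()
print(doges.submit(b"doge-1"))   # first copy is accepted
print(doges.submit(b"doge-1"))   # identical copy is rejected
print(doges.submit(b"doge-1 "))  # one extra byte counts as "new"
```

The last call shows the catch: byte-level hashing only spots identical files, so a resized or re-encoded copy of the same Doge would slip through. Deciding whether two different files show "the same" image is exactly the kind of borderline call that brings human judgment back in.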

The experiment also offered a 50 ETH reward to whoever managed to sneak an image of a cat past Kleros' jurors. Users submitted a large number of cat images, which jurors correctly identified (to learn more about these attempts, see this post). Many of these could also have been identified by algorithms.

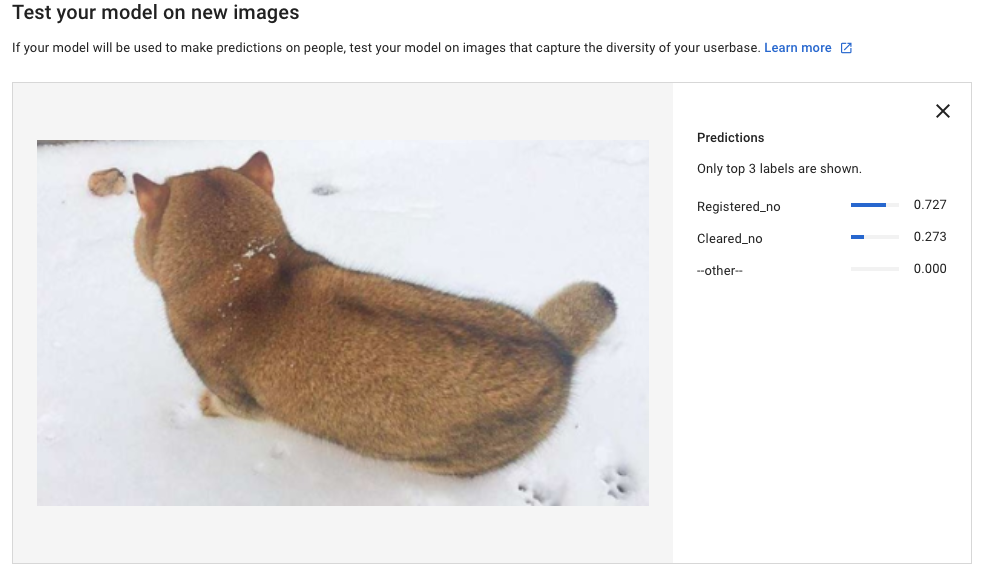

And then someone sent the following:

The image made it onto the list unchallenged (hey, it looks like a Doge, right?). The submitter then sent the picture below and argued that the animal in the previous image and the one below were the same. Since he had succeeded in sneaking a cat onto the list, he continued, he was eligible for the 50 ETH reward.

The payout policy stated that, to be eligible for the reward, the image had to "clearly display" a cat. It could be argued, of course, that the initially submitted image didn't "clearly display" a cat.

Image recognition software was pretty confident that this was a Doge:

However, one could also argue that the image actually showed this dog (rather than a cat), photographed from this angle:

Since we couldn't reach an agreement on this, we decided to open a Kleros escrow dispute over the 50 ETH between Coopérative Kleros (which held that the image didn't comply with the payout policy) and the submitter (who argued that it did).

Jurors were asked to answer the question: does the image comply with the payout policy of "clearly" displaying a cat?

Jurors ruled that the image did not comply with the policy. Coopérative Kleros won.

The Limits of AI

The seemingly silly "Doge in the Snow" dispute highlights some critical points about the limits of AI as the legal industry undergoes digital disruption.

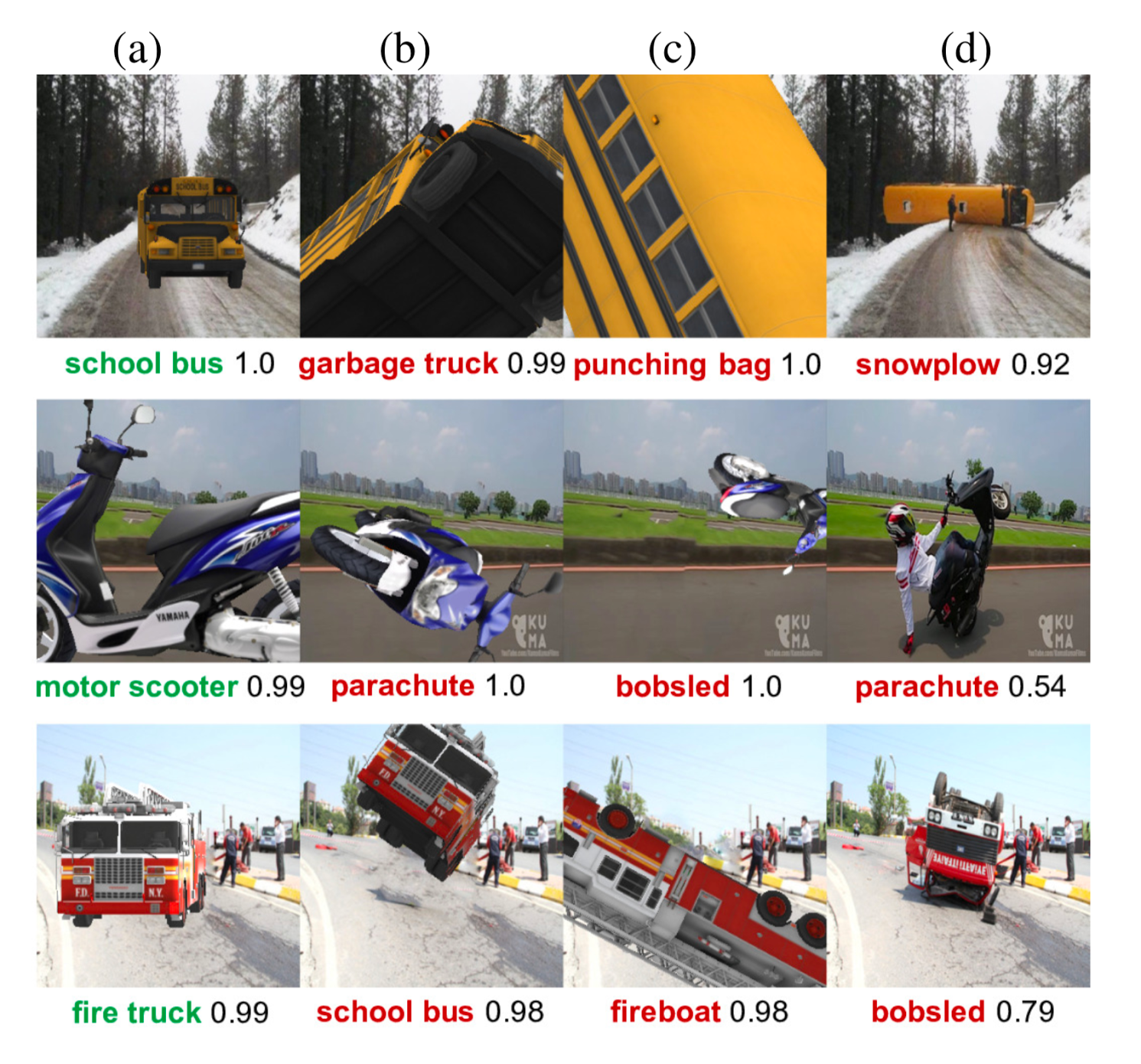

It is beyond doubt that AI is immensely useful for processing large amounts of data. However, it also has limitations. For example, researchers found that Google's image recognition AI could be fooled by something as trivial as placing ordinary objects in unusual positions, as shown in the following image.

The limits of artificial intelligence have already been tested by big Internet platforms in the context of content moderation.

YouTube uses algorithms to identify videos that may infringe copyright. Social media companies use AI to detect comments that violate their terms of service. E-commerce platforms use AI to identify fake reviews and to take down listings that violate their terms of service.

But these identification algorithms still produce many false positives (legitimate content flagged as a violation) and false negatives (violations that slip through).
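To make the two error types concrete, here is a minimal Python sketch that tallies a moderation classifier's verdicts against the ground truth a careful human reviewer would assign. The function name and the toy labels are hypothetical, not data from any platform.

```python
from collections import Counter


def confusion_counts(predicted: list[bool], actual: list[bool]) -> Counter:
    """Tally outcomes of a binary 'violates policy?' classifier.

    predicted[i] is the algorithm's verdict; actual[i] is the ground truth.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    counts = Counter()
    for p, a in zip(predicted, actual):
        if p and a:
            counts["true_positive"] += 1   # violation correctly caught
        elif p and not a:
            counts["false_positive"] += 1  # legitimate content taken down
        elif not p and a:
            counts["false_negative"] += 1  # violation slips through
        else:
            counts["true_negative"] += 1   # legitimate content left alone
    return counts


# Toy example: five pieces of content, hypothetical labels.
verdicts = [True, True, False, False, True]
truth = [True, False, True, False, True]
print(confusion_counts(verdicts, truth))
```

Every false positive is a user wrongly sanctioned and every false negative is abuse left online; both are the borderline cases that end up in front of human moderators.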

No matter how far the technology for rooting out fake reviews advances, AI is likely to miss some of the tricks scammers play unless a human is on hand to judge borderline cases. On top of its algorithms, YouTube employs over 10,000 human content moderators. Facebook has around 30,000 human reviewers to keep up with a whopping 510,000 comments, 293,000 status updates and 136,000 photos uploaded every 60 seconds.
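A back-of-the-envelope calculation with the Facebook figures above shows why human review alone cannot keep up. Summing the per-minute volumes and, purely for illustration, assuming all 30,000 reviewers worked around the clock on nothing else:

```latex
\begin{aligned}
510{,}000 + 293{,}000 + 136{,}000 &= 939{,}000 \text{ items per minute} \\
939{,}000 \times 1{,}440\ \text{min/day} &= 1{,}352{,}160{,}000 \text{ items per day} \\
939{,}000 \div 30{,}000 &\approx 31.3 \text{ items per reviewer per minute}
\end{aligned}
```

That leaves each reviewer just under two seconds per item, which is why algorithms do the first pass and humans are kept for the borderline cases.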

The next big challenge is "deepfake" content. Deepfake is a "technique for human image synthesis based on artificial intelligence. It is used to combine and superimpose existing images and videos onto source images or videos" using machine learning. "Deepfakes have been used to create fake celebrity pornographic videos or revenge porn."

AI tools are being developed to detect deepfakes automatically, but this kind of validation may also require some human participation.

The Boundaries of Collective and Artificial Intelligence

The experience of big tech platforms in resolving user disputes and moderating content may offer a preview of how artificial intelligence will affect the future of law.

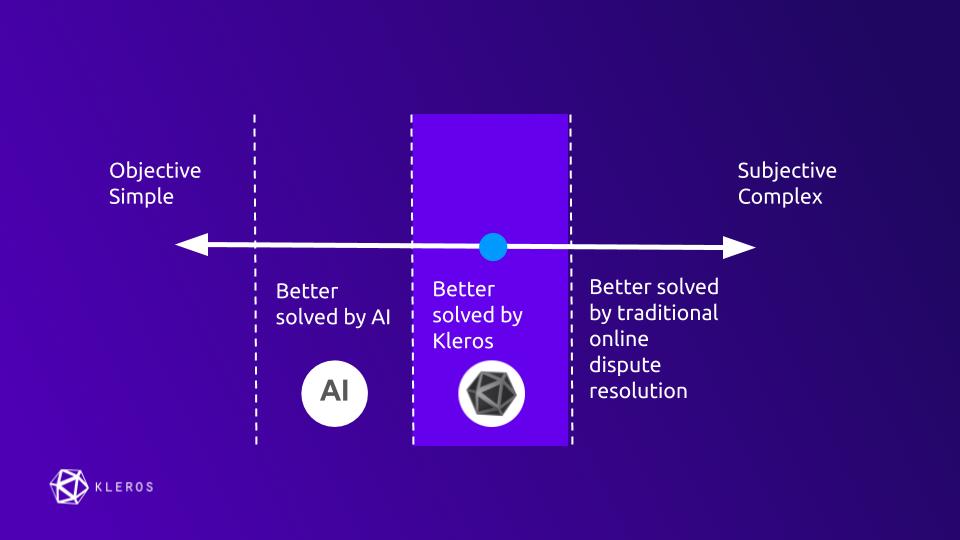

Over time, AI will probably become unbeatable at simple, objective decisions. Automation will make decision-making cheaper and faster than any human-based procedure could.

Collective intelligence methods such as Kleros will be especially useful for decisions that require a great deal of transparency (because the parties have conflicting interests) but are too complex for AI to handle. When human judgment is needed to appreciate the nuances of a situation, collective intelligence trumps artificial intelligence.

And then there are situations involving even higher levels of subjectivity and complexity. For example, disputes involving a conflict of values, or where the stakes are extremely high, may not be fertile ground for either artificial or collective intelligence. People may accept an algorithm or a crowd settling an e-commerce dispute over a couple of hundred dollars, but they are unlikely to let them decide disputes that affect fundamental rights (e.g., sending someone to jail). This is probably where traditional dispute resolution systems and lawyers will keep an edge, at least for the foreseeable future.

Of course, these systems may adopt digital enhancements, such as online communication, to increase efficiency and reduce the need to go to court in person. But essentially, the procedure will still look like traditional court proceedings.

The disruption of the legal industry is just beginning, and new technologies are coming into play, each addressing a specific type of problem: AI handles mechanical and repetitive tasks, crowdsourcing resolves more complex cases, and traditional methods remain available when the stakes are really high.
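The three-tier division described above can be sketched as a simple routing rule. This is a hypothetical illustration of the argument, not how Kleros or any court actually assigns cases: the `Dispute` fields, the use of model confidence as a stand-in for "simple and objective", the $100,000 cut-off and the 0.95 confidence floor are all arbitrary assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Forum(Enum):
    ALGORITHM = "automated decision"
    CROWD = "collective intelligence (e.g. a Kleros jury)"
    COURT = "traditional court proceedings"


@dataclass
class Dispute:
    amount_usd: float         # value at stake
    model_confidence: float   # classifier's confidence in its own verdict, 0..1
    fundamental_rights: bool  # e.g. someone's liberty is at stake


# Hypothetical thresholds, chosen only for illustration.
HIGH_STAKES_USD = 100_000
CONFIDENCE_FLOOR = 0.95


def route(d: Dispute) -> Forum:
    """Send a dispute to the cheapest forum that can legitimately decide it."""
    # Stakes are checked first: even a very confident model should not
    # decide a case that affects fundamental rights.
    if d.fundamental_rights or d.amount_usd >= HIGH_STAKES_USD:
        return Forum.COURT
    # Simple, objective cases: the model is sure of itself.
    if d.model_confidence >= CONFIDENCE_FLOOR:
        return Forum.ALGORITHM
    # Nuanced cases (a "Doge in the Snow") go to human jurors.
    return Forum.CROWD


print(route(Dispute(200, 0.99, False)).name)   # routine e-commerce claim
print(route(Dispute(200, 0.60, False)).name)   # ambiguous image
print(route(Dispute(200, 0.99, True)).name)    # fundamental rights at stake
```

The ordering of the checks is the design choice that matters: cost and speed only decide between the algorithm and the crowd once the case has been ruled out as high-stakes.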
