Sophie Nappert - Arbitration in the Age of Algocracy: Who Do You Trust?

Sophie Nappert takes us on a journey through the relationship between humans and algorithms in 21st-century arbitration, and considers how to keep the perils of algocracy in check.

Sophie Nappert (1) is an arbitrator in international disputes, based in London. She publishes and lectures on the impact of technology on arbitral decision-making and is enthusiastic about the development of decentralized justice.

Technology up to now has been at the service of the arbitration process, assisting with the accuracy and speed of procedurally repetitive or voluminous tasks, such as the review and classification of documents; and the development of matrices for the calculation of quantum (jargon for the monetary outcome of the dispute), typically involving the analysis of future profits on a discounted cash flow basis.

By now, one would have expected technology to have had a real, positive impact on bringing down the procedural time and costs of arbitration. The reality is that, if anything, time and costs are on the rise. One reason for this apparent mismatch might be that, in attempting to draw a parallel between the use of technology in proceedings and the time and costs of those proceedings, we are drawing the wrong parallel.

The right parallel to draw is between the use of technology and the processing of instantaneous information. And we must not confuse the immediacy of acquiring information with our capacity to sift through that information, digest it, and use it to inform our decision-making.

There is no technology to accelerate that latter process when it is carried out by humans. Immediacy of communication and information does not necessarily translate into faster, more accurate decision-making. This may go some way towards explaining why international arbitration has been slow to embrace technology. (1)

We are now at a stage where technological development enters the realm of the substantive, hitherto the preserve of human decision-makers: assessing the truth of a witness’s testimony on the basis of an analysis of her voice intonations and facial micro-expressions; or generating in record time a reasoned decision that takes into account prior precedent, as illustrated by the Prometea initiative, an algorithm devised to assist the Public Prosecutor’s Office in Buenos Aires to clear the backlog of administrative law and fiscal cases.

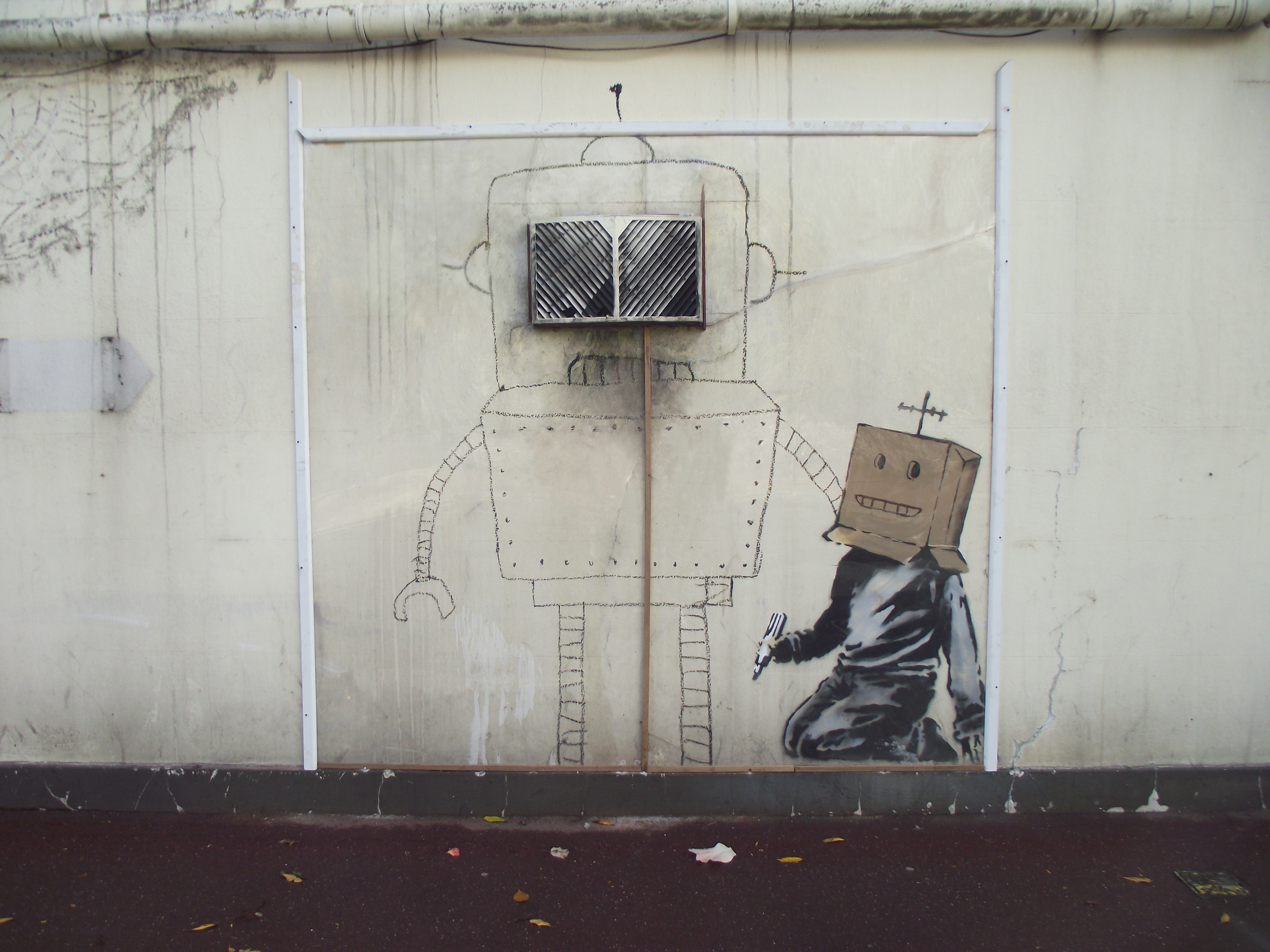

We are, in other words, entering the age of what has been aptly described as “Algocracy”, or governance by algorithms. The challenge that this presents, for arbitration as for many other processes currently dominated by human decision-making, is whether, in allowing algocracy to flourish, we are creating decision-making processes that will constrain and limit opportunities for human participation. (2)

The list of questions that may soon cease to be hypothetical is illuminating. Here are a few:

- If technology exists that could determine a witness’s veracity with unerring accuracy, would we be forced to use it?

- Would arbitrators face a challenge from a party for using that technology because the party felt that the arbitrators were abdicating their responsibility to adjudicate the case to a machine?

- Would arbitrators face a challenge for not using that technology because the party felt that arbitrators ought to use every tool at their disposal to determine the truth?

- Would counsel hesitate to put forward certain witnesses on the basis that they might not survive the scrutiny of technology?

- Would arbitrators draw an adverse inference from a witness willing to testify but unwilling to use the machine?

Returning to arbitration, the answers to the above questions lead to more fundamental questions about what we want from the arbitral process, and what it can deliver: A flawlessly logical, entirely dispassionate outcome? Unanswerable legal reasoning (and if so, query the need for appeal or review in tomorrow’s dispute resolution world)? A search for the truth? What is the place of equity in the technological world?

Does technology need to be kept in check and confined to its current role as assistant to the decision-making process? Or do we allow it to take the process over and, by shepherding the human part of it through those areas where the mind is eminently fallible (e.g. areas involving complex mathematical calculations or cognitive bias), confine humans to deciding only those issues where they can still have an edge, such as issues involving equity, fairness or empathy? Would this amount to a better process in the end?

Technology also causes us to rethink the tenet of the individual nature of the arbitrator’s mandate, and how much of it can be relinquished without delegating the function altogether. It has been said that writing one’s own awards is the safeguard of intellectual integrity. What happens, then, if technology gets ahead of you, leads you by the hand through true or false witness testimony or algorithmic computation, and presents you with an inevitable conclusion?

By way of a practical illustration of this last challenge, I am currently co-chairing a task force looking at the treatment of allegations of corruption in international arbitration. We plan to examine whether arbitrators could derive assistance from an algorithm programmed to recognise red flags (indicators of corruption) in a given set of factual circumstances, and to determine the percentage chance of corruption being, or not being, present.

Something along those lines has already been conceived by researchers at the University of Cambridge, who developed a series of algorithms that mine public procurement data for signs of the abuse of public finances (e.g. an unusually short tender period; a low number of bidders in a competitive industry; unusually complex or inaccessible tender documents). If the algorithm returns, say, a result showing a 65% probability of corruption being present, what scope remains for the human arbitrator to disagree, and on what basis?
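To make the idea of a red-flag algorithm concrete, here is a minimal sketch in Python. It is not the Cambridge researchers' method, nor anything the task force has adopted. The `Tender` fields, the thresholds, the weights and the baseline are all hypothetical values chosen for illustration, and the logistic combination of flags is simply one common way of turning a weighted score into a probability.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tender:
    """Hypothetical summary of one public procurement tender."""
    tender_period_days: int
    bidder_count: int
    document_pages: int


# Each red flag pairs a test with a weight. Thresholds and weights are
# invented for illustration; a real system would estimate them from data.
RED_FLAGS = {
    "short_tender_period": (lambda t: t.tender_period_days < 14, 1.2),
    "few_bidders": (lambda t: t.bidder_count <= 1, 1.5),
    "complex_documents": (lambda t: t.document_pages > 300, 0.8),
}

# Assumed log-odds of corruption when no flag is raised (also illustrative).
BASELINE = -2.0


def raised_flags(tender):
    """Return the names of the red flags that this tender triggers."""
    return [name for name, (test, _weight) in RED_FLAGS.items() if test(tender)]


def corruption_risk(tender):
    """Combine the triggered flags into a probability-like score in (0, 1)."""
    log_odds = BASELINE + sum(RED_FLAGS[name][1] for name in raised_flags(tender))
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    suspicious = Tender(tender_period_days=7, bidder_count=1, document_pages=450)
    print(raised_flags(suspicious))
    print(f"{corruption_risk(suspicious):.0%}")
```

Reporting the triggered flags alongside the score is a deliberate choice in this sketch: an arbitrator who can see which indicators drove the percentage at least has something to test against the evidence, which bears directly on the question of the basis on which a human might disagree.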

One thread running through these important questions is that of trust, a most intangible, and most human, attribute. In selecting arbitration as an alternative to the judicial system, disputants make an active choice based on the trust that they place in arbitration to provide a process and outcome suited to their industry, or values. (3)

Legal sociology scholarship posits that trust is built upon the perception of the fairness of a dispute resolution system, which itself is based on a belief that the system is neutral, respectful, representative and demonstrates care towards the litigant. This necessarily implies that human trust is earned over time, by the repeated demonstration of accuracy and fairness.

Historical notions of trust have been upended by the decentralization underpinning blockchain and smart contracts. It has been said that the advent of smart contracts raises a new set of trust issues, re-allocating the trust traditionally placed in institutions (and, for arbitration, “trusted third parties”), to a system premised on code and the powerful actors within this system. (4)

It has also been stated that smart contracts may be ill-suited to long-term contractual relationships that “entail the possibility of greater uncertainty due to the natural limitation of human foresight and a greater number of permutations, including external factors beyond the control of the parties. Such relationships entail high levels of interpersonal trust.” (5)

The resolution of disputes over these long-term relationships also typically involves consideration of appropriateness, fairness and equity (one classic example is gas price review disputes) (6), falling squarely within those areas identified above as particularly suited to the human mind rather than to intelligent machines.

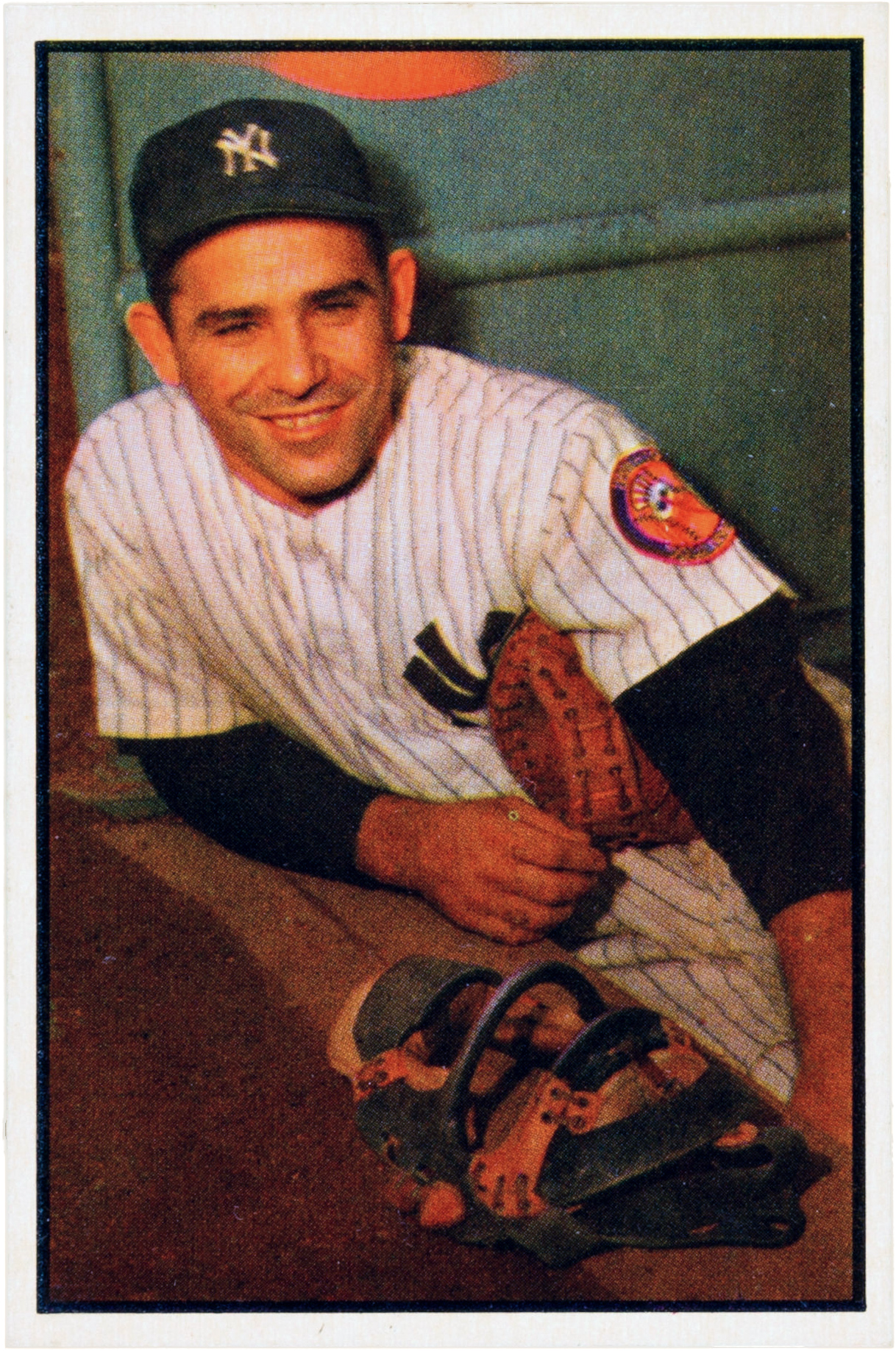

Yogi Berra, the baseball player and inadvertent apostle of the statement of the obvious, once quipped that “predictions are hard to make -- especially about the future.”

At this stage, however, we can say with some certainty that, as the blockchain and smart contract ecosystem develops with a view to becoming more mainstream, human disputants will continue to trust other humans – Kleros’ jurors – to decide the disputes that arise in this new ecosystem.

As greater familiarity with the disintermediation of human interaction sets in, there is a good case to be made for a fruitful partnership between intelligent machines and the human mind, keeping the perils of algocracy in check.

(1) I thank Mihaela Apostol and Avani Agarwal for research assistance. We leave aside for these purposes the phenomenon of documents-only arbitration, such as domain name disputes under the auspices of ICANN, and the like.

(2) “(…) a system in which algorithms are used to collect, collate and organise the data upon which decisions are typically made, and to assist in how that data is processed and communicated through the relevant governance system. This can be done by algorithms forcing changes in the structure of the physical environment in which the humans operate (…). Such systems may be automated or semi-automated. Or may retain human supervision and input.” J Danaher, “The Threat of Algocracy: Reality, Resistance and Accommodation” (2016) 29:3 Philosophy & Technology 245-268, available at https://www.researchgate.net/publication/291015337_The_Threat_of_Algocracy_Reality_Resistance_and_Accommodation

(3) The concept of arbitration “is a simple one. Parties who are in dispute agree to submit their disagreement to a person whose expertise or judgment they trust. They each put their respective cases to this person – this private individual, this arbitrator – who listens, considers the facts and the arguments, and then makes a decision. That decision is final and binding on the parties; and it is binding because the parties have agreed that it should be, rather than because of the coercive power of any State.” N Blackaby and C Partasides with A Redfern and M Hunter, Redfern and Hunter on International Arbitration (OUP, 2015).

(4) Mimi Zou, Grace Cheng, Marta Soria Heredia, “In Code(r) We Trust? Rethinking ‘Trustless’ Smart Contracts”, 7 June 2019, available at: https://www.law.ox.ac.uk/business-law-blog/blog/2019/06/coder-we-trust

(5) Ibid.

(6) “(…) most price review clauses in Asian LNG contracts are general in nature and say very little (…), if anything, about the factors which should be taken into account in a price review. Industry evidence suggests that some Asian price reopeners refer to ‘various factors’ or broader economic considerations (like ‘levels and trends in the price of oil and gas in Asia-Pacific region’) which should guide price review discussions. In addition, or in the alternative, some Asian LNG contracts require that the price adjustment shall be ‘appropriate’, ‘reasonable’, ‘equitable’, ‘fair and justified’, or apply a combination of these, or similar, thresholds.” Agnieszka Ason, “Price Reviews and Arbitration in Asian LNG Markets”, The Oxford Institute for Energy Studies (April 2019), available at https://www.oxfordenergy.org/wpcms/wp-content/uploads/2019/03/Price-reviews-and-arbitrations-in-Asian-LNG-markets-NG144.pdf
