Choosing Parameters in Kleros Curate

Our Director of Research, Dr. William George, describes how to design parameters for Kleros Curate.

Designing a high-quality list is challenging. Here is some practical advice to help you on your way.

When deploying a new token curated registry in Kleros Curate, a number of parameter choices need to be made. When creating your list, you basically need to make all of the choices that the Kleros team made when deploying applications such as the T2CR.

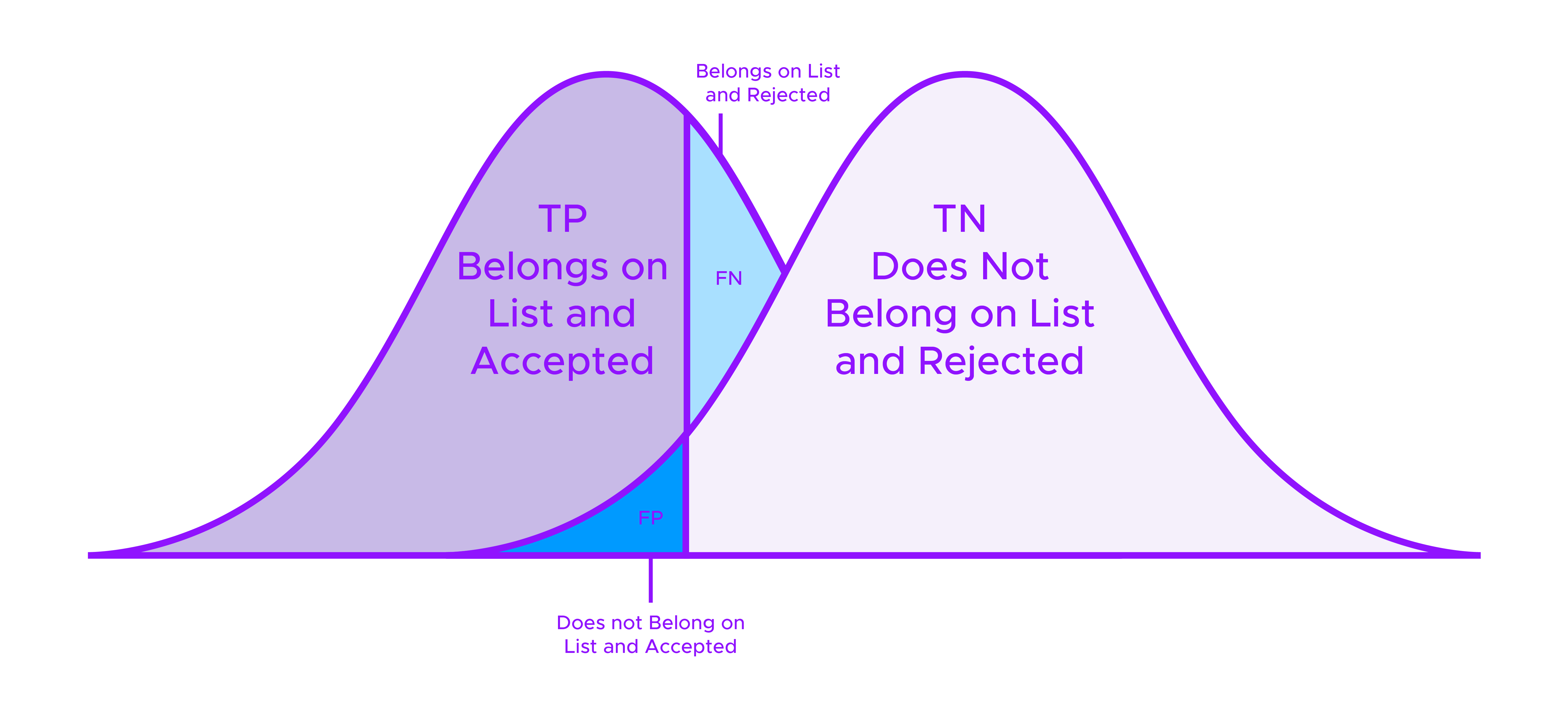

As we will see in detail below, many of the choices that you must make involve striking a balance between maintaining a very high-integrity list (one where it is exceptionally rare that any element fails to conform to its required criteria) and not putting in place so many safeguards that the list is difficult to submit to and largely empty. This is similar to balancing Type I and Type II errors when designing an experiment.

In this article, we will walk you through some of the points to keep in mind when creating a list with Kleros Curate. Should you require further assistance, you can always contact us via Slack or in our dedicated Telegram for Curate Support.

Basic Parameters

The Primary Document: Defining the Acceptance Criteria

Fundamental to any new list is its "Primary Document". This is a set of policies or criteria that are shown to jurors to provide them with a framework to decide whether an element belongs on the list. These policies should be easy to understand and clearly relate to the stated goal of the list. Writing a good Primary Document is subtle and depends heavily on the specific application of the list.

Here are a few things to keep in mind while writing your Primary Document:

- Carefully reflect on what types of elements should be on your list.

- Be specific.

- Write clearly; consider breaking criteria into digestible bullet points.

- Before you submit, try to catch spelling and grammar errors in your Primary Document that might cause confusion.

- Keep your criteria as simple as possible, while nonetheless anticipating problematic edge cases.

Generally speaking, when writing the Primary Document, try to put yourself in the shoes of jurors who would have to decide whether some item should be accepted in the list or not: what set of criteria would make your decision easier?

Let's see an example of a good and a bad Primary Document for a list of alpaca socks.

Good Primary Document

- Must be socks.

- Must be made out of >80% Alpaca wool.

- Socks worn by Alpacas that do not meet the thresholds for percentage of Alpaca wool should be rejected.

Bad Primary Document

- Foot garments of which a large amount of the material consists of the epidermal growths of quadrupeds native to the Andean region of South America.

Choice of Court

Another fundamental choice for your new list is what Kleros court it will use as its arbitrator. You want the jurors that are ruling on whether an element belongs on the list or not to be likely to make correct decisions. However, different Kleros courts require that would-be jurors have different skills. So you want to pick a court where jurors will have the skills required to curate your list.

For example: if you are using your list for content moderation on a new social network platform, you probably want to choose the "Curation" court, which is designed for micro-tasks, particularly around content moderation.

If you are building a marketplace for advertising or publicity services, and you want to have a curated list of which sellers of such services meet minimum standards, you might want to choose the "Marketplace Services" court.

If you want to maintain a curated list of bug-free Dapps, you would want to choose the "Blockchain Technical" court, where jurors are expected to be able to analyze cases regarding Solidity bugs.

Moreover, different courts may have higher or lower arbitration fees and are generally calibrated for harder or easier tasks. A list that is used for eliminating profanity in content moderation might require fairly little effort per case. On the other hand, submissions to a list used to determine whether crypto-projects meet the listing requirements of an exchange might take hours to analyze.

You should gauge the average effort that will be required in disputes arising from your list and choose an appropriate court. If you choose a court that is calibrated for much easier tasks than what your list requires, jurors will likely either refuse to arbitrate or will only give a passing glance to your cases, resulting in a lot of variability in your list.

Among currently existing courts, the "Curation" court has the cheapest fees and is calibrated for relatively small, unskilled tasks that should normally take only a few minutes to analyze.

The "Blockchain Non-Technical" court might be well calibrated for tasks that take around 30 minutes to analyze, particularly if broad knowledge of the blockchain/crypto ecosystem is useful in this analysis.

The "General" court can serve as a catch-all for tasks that do not fit well into other courts. Its fees, which are somewhat higher than those of the "Blockchain Non-Technical" court, were set to be viable for a broad selection of disputes, but they may or may not be optimal for your application.

There are also a few courts with higher fees for specialized tasks such as the "Token Listing" and "Blockchain Technical" courts. You might consider using one of these courts if your application calls for these specialized skills.

Note that it is possible that none of the existing Kleros courts are appropriate for your use case. Then, you might want to submit a proposal to the Kleros governor to have a new court created.

Anyone can perform any of these steps. However, if you have difficulty determining which court is appropriate for your list or need help for creating a new court, you can contact the Kleros team via Slack or in our dedicated Telegram for Curate Support.

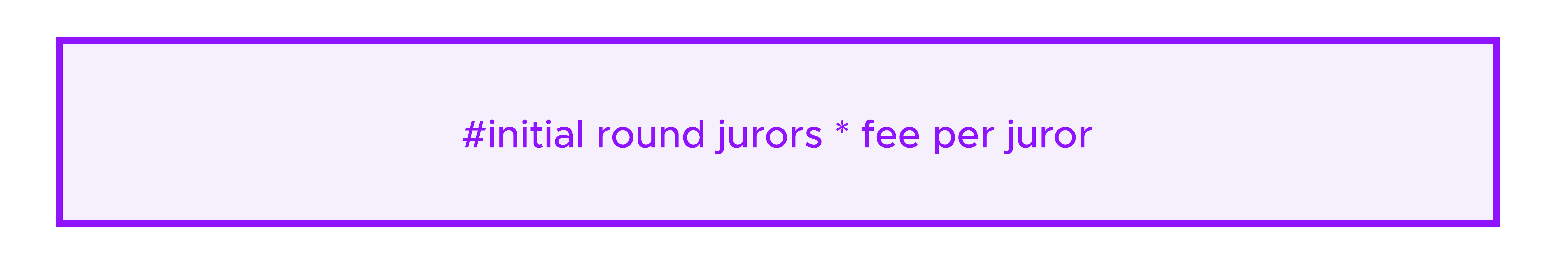

Number of Jurors

The next thing that you should determine is how many jurors will be drawn in the first round of any eventual disputes involving your list. Most Kleros Dapps so far have drawn three jurors in the initial round of any dispute. However, one can have initial rounds with as few as a single juror.

Remember that, in Kleros, jurors are rewarded or penalized based on whether they are coherent with the decision of the last appeal round (see our white paper for more details on the incentive system).

So even a single juror in an initial round will have an incentive to analyze a case and vote for a decision that is unlikely to be overturned. This can still result in an effective list, particularly when cases are very straightforward, and not a lot of effort is required to recognize an incorrect decision and crowdfund an appeal.

In situations where significant effort is required to review the case and there is more friction in the appeal process, it can be better to guarantee a higher level of rigor in the initial decision by requiring more jurors. On the other hand, if you set a higher number of initial jurors, this will result in larger deposits being required to use your list, creating a barrier to its use.

The Submission Challenge Bounty

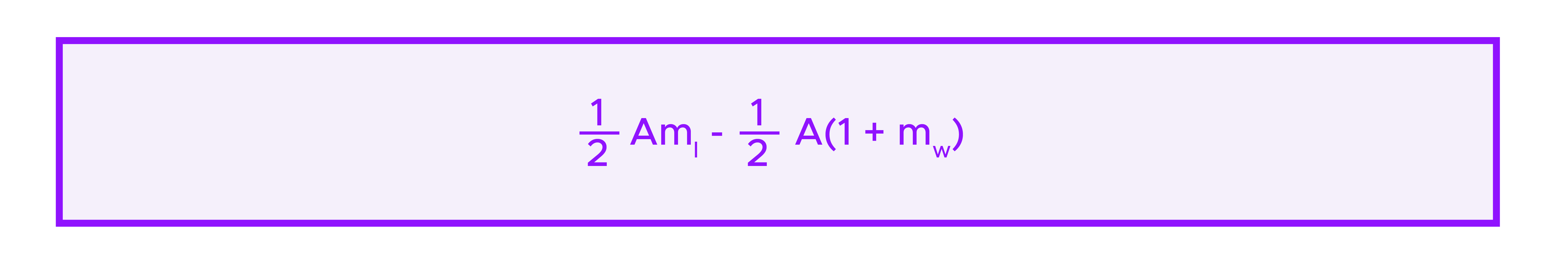

To begin with, any new submission to your list will require a deposit of at least

$$\text{arbitration fees} = \text{number of jurors} \times \text{fee per juror},$$

where the fee per juror depends on which court you choose to arbitrate your list.

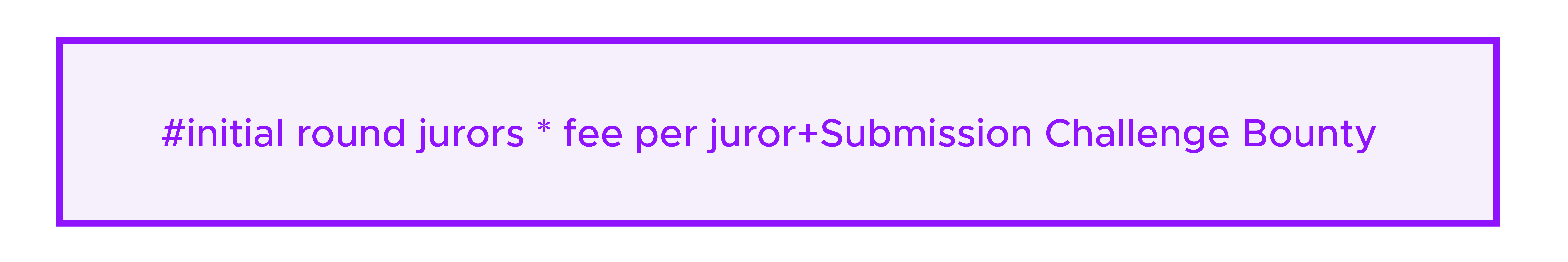

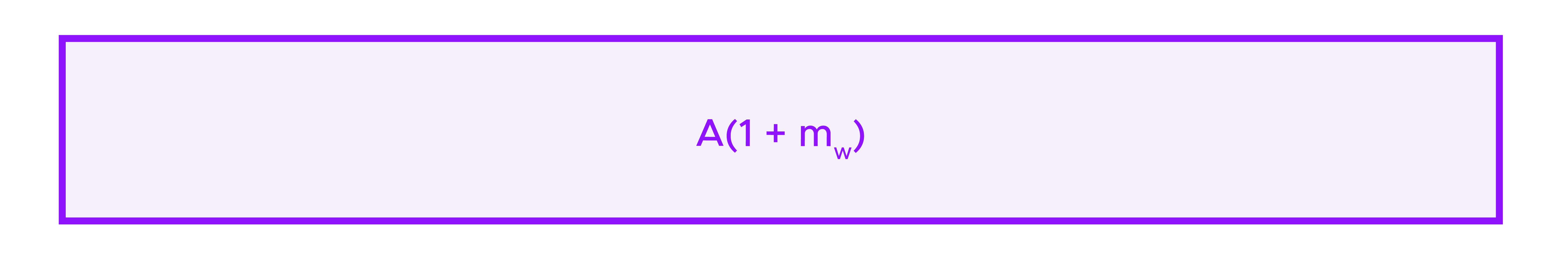

However, you typically want to require deposits that are somewhat higher than this. Indeed, jurors are not the only actors that will have to spend time and effort evaluating whether entries belong on your list. You also want to incentivize challengers to flag incorrect submissions.

For this, submission deposits should include a bounty that is paid to successful challengers. This amount is set by the Submission Challenge Bounty parameter, so the total amount that must be deposited with a submission is

$$\text{total deposit} = \text{arbitration fees} + \text{Submission Challenge Bounty}.$$

Generally, if your Submission Challenge Bounty is too low, incorrect submissions will not be flagged for dispute. On the other hand, if your submission deposit is very high, challengers will be likely to catch malicious submissions, but people will only rarely submit to your list, so you will have a list that is difficult to attack but largely empty.

The task of the challenger is typically at least as difficult as that of the juror, because while the juror only has to concentrate on a single case and decide whether or not it conforms to requirements, the challenger must first sift through lists of pending submissions to try to pick out which entries seem suspicious.

However, challengers only receive compensation for successful challenges, and they receive nothing for the effort they spend looking at submissions that they ultimately decide are correct and should not be flagged. As a result, properly incentivizing challengers is subtle. Indeed, if rewards for challengers are increased and malicious submissions are more likely to be flagged, then one can expect fewer malicious submissions to be attempted, so the percentage of pending submissions that are worth flagging will go down, making the task of the challenger even more difficult. We call this effect the Challenger's Dilemma.

Hence, even for lists that are less rigorous, namely those that accept a higher percentage of incorrect content in exchange for having lower fees, you probably want to take the Submission Challenge Bounty to be at least 1-2 times the fee per juror in the court you choose. For more rigorous lists, you might want the Submission Challenge Bounty to be many times this.
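To make the arithmetic concrete, here is a minimal Python sketch of the submission deposit described above. The function names and the sample numbers (three jurors, a fee of 0.05 ETH per juror) are illustrative assumptions, not values taken from any actual Kleros court.

```python
def arbitration_fees(number_of_jurors: int, fee_per_juror: float) -> float:
    """Fees needed to pay the jurors drawn in the first round."""
    return number_of_jurors * fee_per_juror


def submission_deposit(number_of_jurors: int, fee_per_juror: float,
                       bounty_multiple: float) -> float:
    """Total deposit: arbitration fees plus the Submission Challenge Bounty.

    The bounty is expressed here as a multiple of the fee per juror,
    following the 1-2x rule of thumb for less rigorous lists.
    """
    bounty = bounty_multiple * fee_per_juror
    return arbitration_fees(number_of_jurors, fee_per_juror) + bounty


# Hypothetical numbers: 3 jurors at 0.05 ETH per juror.
for multiple in (1, 2, 10):
    total = submission_deposit(3, 0.05, multiple)
    print(f"bounty = {multiple}x fee per juror -> deposit = {total:.2f} ETH")
```

Running this for a few bounty multiples shows how quickly the barrier to submission grows as you make the list more rigorous.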

Advanced options

At this point, we have covered the basic parameters that anyone creating a new list will have to consider. However, there are several other parameters that you can tune if you decide to go into the "Advanced Options". The UI has default values loaded for these parameters, but you might want to make more customized choices. The following discussion can help you do so.

Challenge Period Duration

You will want to consider the effort required to evaluate candidates to your list when choosing the Challenge Period Duration. If you set this value too low, challengers will not necessarily have time to flag incorrect submissions to your list and the quality of the list will decrease. On the other hand, if you set this value too high, your list will have unnecessary friction in responding to new entries.

More deposits

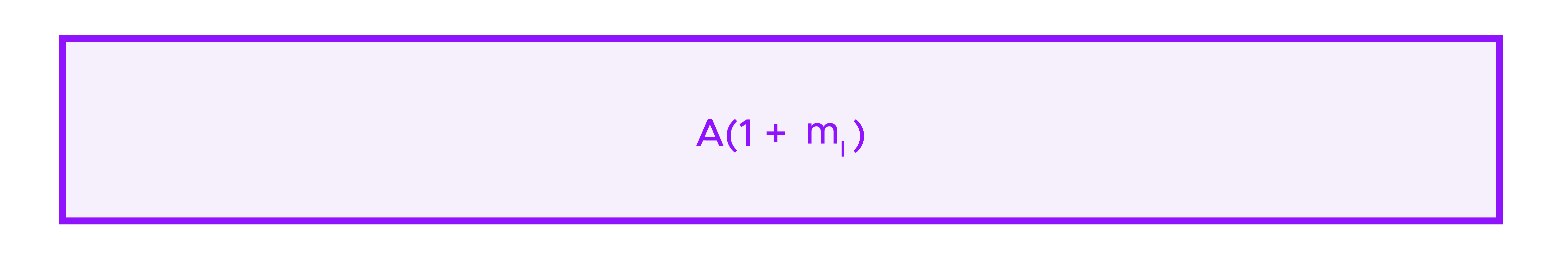

In addition to the Submission Challenge Bounty, there are several more parameters that you can tune that have an impact on the size of deposits. In particular, challengers must also place a deposit when flagging a submission. As for submitters, their deposit must cover at least the arbitration fees that the jurors need to be paid in the event that the challenger loses her dispute. There is a tunable quantity that you can adjust, Incorrect Challenge Compensation, that mirrors Submission Challenge Bounty. This quantity is given to the submitter in the event that the submission is challenged but ultimately is ruled to be correct.

One typically wants Incorrect Challenge Compensation to be zero. A larger Incorrect Challenge Compensation provides some additional incentive for submitters, but this is generally not necessary: being on the list should have enough value to be its own reward. Moreover, a large Incorrect Challenge Compensation has an effect similar to that of a low Submission Challenge Bounty: it discourages would-be challengers from participating, resulting in a larger percentage of malicious submissions getting through.

On the other hand, if you think that submissions are likely to be often incorrectly challenged, then you might consider a non-zero Incorrect Challenge Compensation. For example, if the arbitration fees that the challenger is required to pay are very low relative to the value of being on the list, one might get a fair number of trolls or spammers challenging submissions, and a non-zero Incorrect Challenge Compensation can help to protect against this.

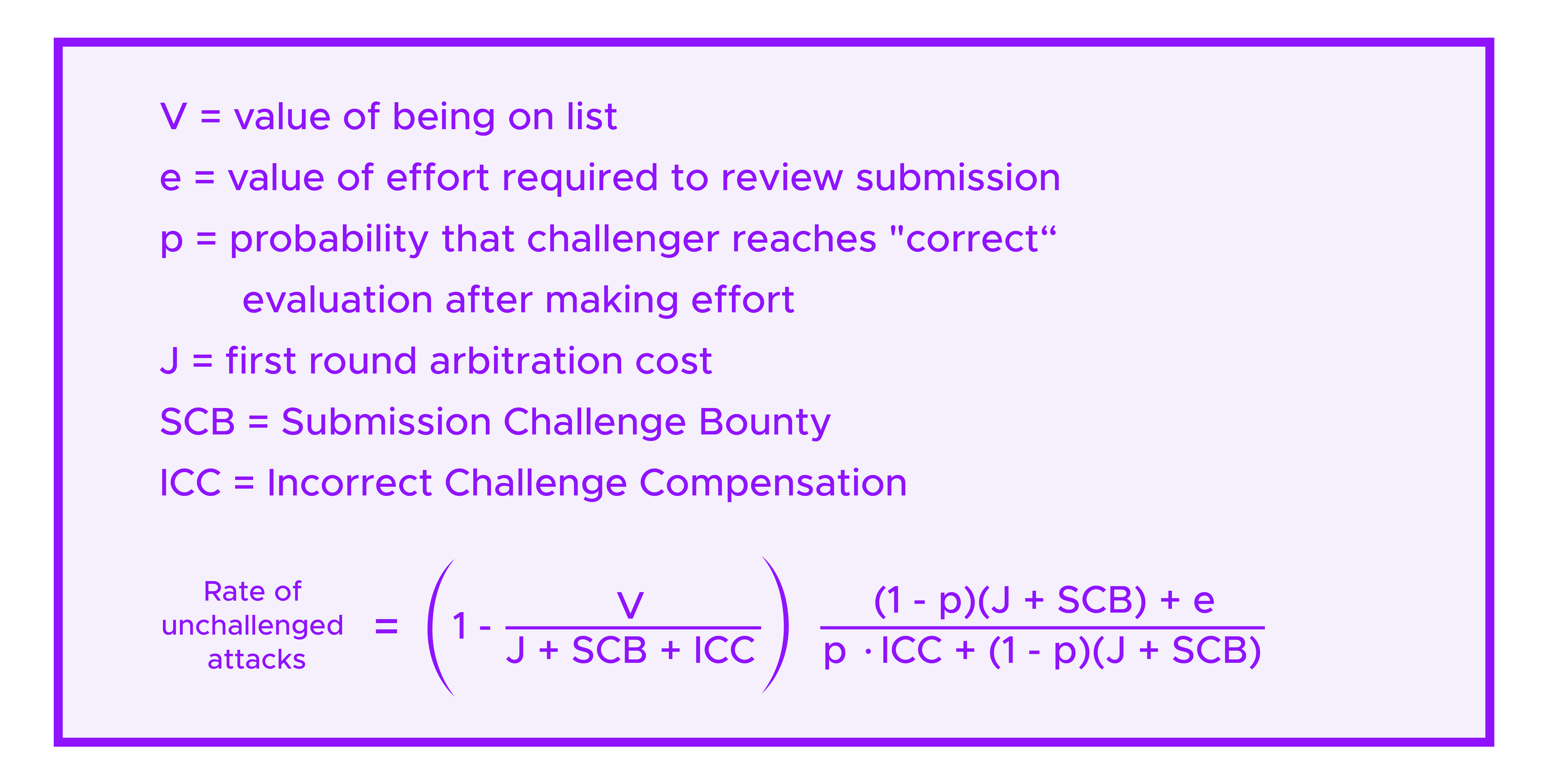

The interplay between the Submission Challenge Bounty and the Incorrect Challenge Compensation gets to the heart of incentivizing challengers, which can be rather subtle. For more reflections on this point, see our article on the "Challenger's Dilemma". There we estimate the rate of malicious submissions that make it into the list in equilibrium because they go unchallenged.

You want to make sure that you choose values for the parameters that will lead to the rate of malicious submissions that make it through unchallenged being acceptably low for your application.

In order to remove an element from a list, either because it was hostile and made it through the list's defenses or because it simply became outdated and no longer fulfills the criteria of the list, the structure of deposits is similar. The party proposing that an element be removed deposits the required arbitration fees plus Removal Challenge Bounty, and this can be challenged by a party that deposits the arbitration fees plus Incorrect Removal Challenge Compensation.
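The four deposits discussed so far can be summarized in a short Python sketch. The class and field names are hypothetical, and the sketch assumes, by analogy with the removal case, that a challenger of a submission deposits the arbitration fees plus Incorrect Challenge Compensation.

```python
from dataclasses import dataclass


@dataclass
class ListDeposits:
    """Hypothetical container for the deposit parameters of a list."""
    arbitration_fees: float
    submission_challenge_bounty: float
    incorrect_challenge_compensation: float
    removal_challenge_bounty: float
    incorrect_removal_challenge_compensation: float

    def required(self) -> dict:
        """Deposit each party puts up: arbitration fees plus its own extra."""
        a = self.arbitration_fees
        return {
            "submitter": a + self.submission_challenge_bounty,
            "submission challenger": a + self.incorrect_challenge_compensation,
            "removal requester": a + self.removal_challenge_bounty,
            "removal challenger": a + self.incorrect_removal_challenge_compensation,
        }


# Illustrative values only (in ETH); not recommendations.
params = ListDeposits(
    arbitration_fees=0.15,
    submission_challenge_bounty=0.10,
    incorrect_challenge_compensation=0.0,
    removal_challenge_bounty=0.10,
    incorrect_removal_challenge_compensation=0.05,
)
for party, amount in params.required().items():
    print(f"{party}: {amount:.2f} ETH")
```

Laying the four amounts side by side makes it easier to see which party you are asking to take on the most risk.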

As a first approximation, since challengers of submissions and removals have similar tasks and should be compensated similarly, it would be reasonable to take Submission Challenge Bounty = Removal Challenge Bounty. If you have a good reason to expect that the rate of challengeable submissions will be much higher or lower than the rate of challengeable removals, then you might want a more finely tuned relationship between these quantities. Namely, the challengers who have to spend the most effort sifting through correct submissions or removals for each entry that they can flag should receive higher rewards per successful challenge.

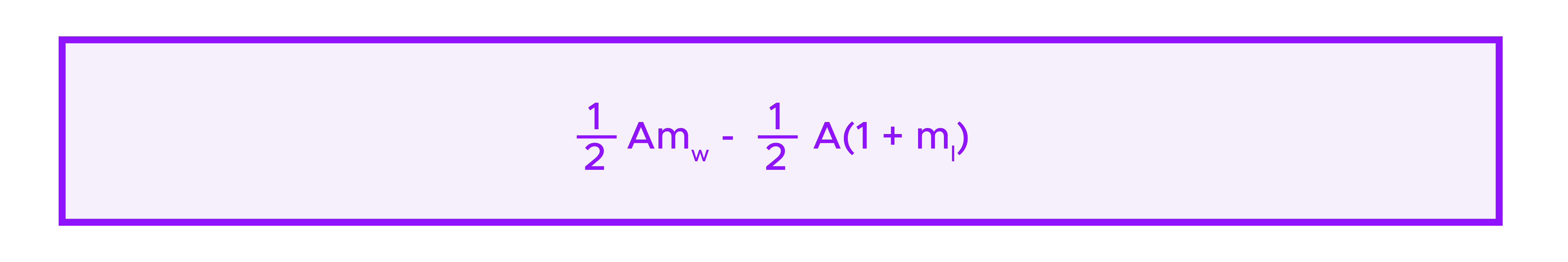

On the other hand, Incorrect Removal Challenge Compensation, the analog of the Incorrect Challenge Compensation here, plays a slightly different role. While submitters generally have some intrinsic motivation for their submission to be accepted on a list, it is not necessarily the case that users who clean the list by submitting removal requests will gain significant personal reward by doing so. One must therefore consider their motivation to put up a deposit of

$$\text{arbitration fees} + \text{Removal Challenge Bounty},$$

which can be lost if their removal is deemed invalid. Then, it might make sense to set a non-zero Incorrect Removal Challenge Compensation,

so that either

- a removal request goes unchallenged and the requester gets back her entire deposit and succeeds in cleaning the list, or

- a removal request is challenged, and while there is some risk that a well-intentioned requester will lose her deposit, if the requester wins the dispute she gets Incorrect Removal Challenge Compensation, compensating her for this risk.

More generally, if you choose to set Removal Challenge Bounty > Incorrect Removal Challenge Compensation, you are encouraging challengers over requesters, reducing the effects of the Challenger's Dilemma, and limiting the degree to which malicious removal requests succeed in taking legitimate content off the list, at the expense of reducing people's incentive to clean the list by making removal requests.

On the other hand, if you take Incorrect Removal Challenge Compensation>Removal Challenge Bounty, then you are encouraging people to clean the list by making removal requests, at the expense of exacerbating the Challenger's Dilemma and risking that legitimate content will be removed.

Stake Multipliers

The various stake multipliers control how much crowdfunders are rewarded for participating in the appeal process. In case of an appeal, both sides need to put in sufficient deposits that cover the fees paid to the jurors in the event that their side ultimately loses the dispute. In fact, both sides generally need to put in additional "stakes" beyond the amount required to pay the jurors. These "stakes" are used to incentivize "crowdfunders" to help parties by covering part of these fees. Specifically, the "stake" of the losing side is paid to whoever paid the fees of the winning side.

However, the two parties to the dispute are not generally required to pay the same amount of stake. Indeed, we typically want the winner of the previous round to be required to pay less stake than the loser(s) of the previous round. Namely, one typically takes the "winner stake multiplier" to be less than the "loser stake multiplier". This gives the previous ruling some inertia while still allowing for the possibility of appeals.

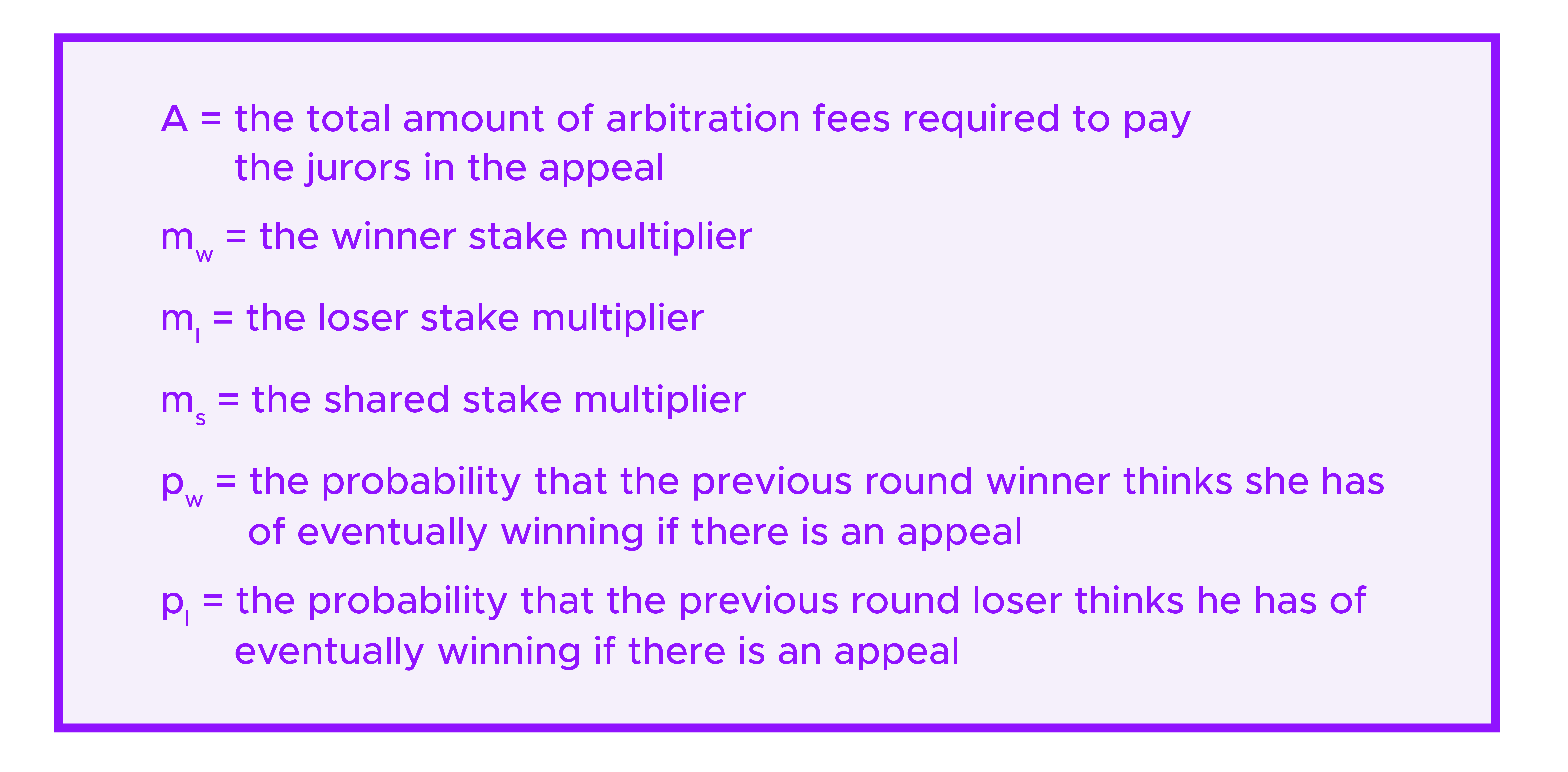

We denote:

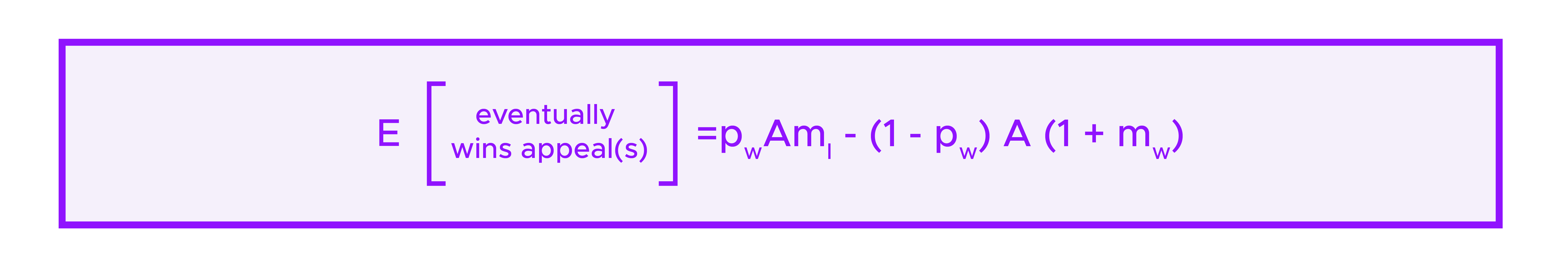

Then the total amount that has to be put in on the side of the previous round winner is

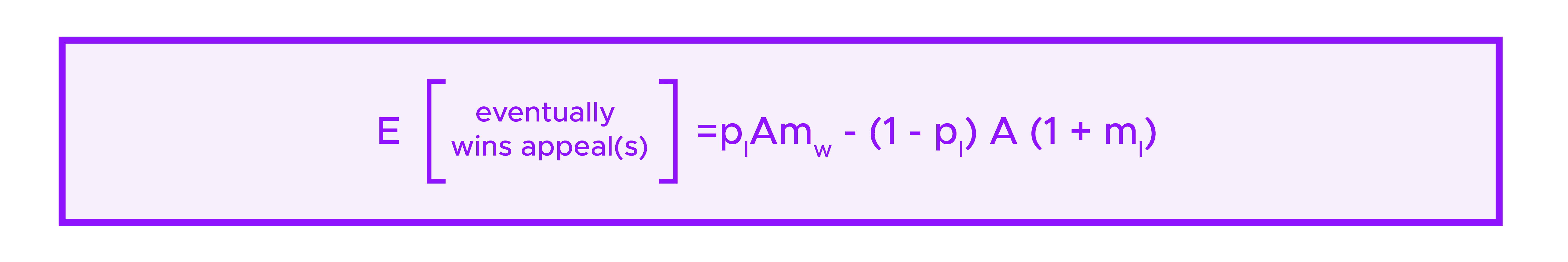

Similarly, the total amount that has to be put in on the side of the previous round loser is

So, if the previous round winner (or some crowdfunder on her side) decides to cover the appeal costs, her expected return is

Similarly, if the previous round loser (or some crowdfunder on his side) decides to cover the appeal costs, his expected return is

Note that if you think that a case is a 50-50 toss-up, then your expected values for crowdfunding the two sides are

and

Whatever one chooses for the stake multipliers, at least one of these quantities is negative. As some of the money put in by crowdfunders is used to pay jurors, the game being played between crowdfunders on opposite sides is negative sum. If $m_w = m_l$, then both of the expected values are negative and rational users who see the case as a toss-up will not crowdfund either side. One nevertheless expects that sometimes both sides of an appeal will be funded, as crowdfunders or parties have different estimates of their respective chances.
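The following Python sketch illustrates why the crowdfunding game is negative sum. It assumes the payoff rule described above: each side deposits the appeal fees A plus its stake, the side that wins recovers its deposit and gains the other side's stake, and the side that loses forfeits its whole deposit. The function name and the probability argument `p_winner` are notation introduced here for illustration.

```python
def expected_returns(p_winner: float, m_w: float, m_l: float, A: float = 1.0):
    """Expected returns for funding each side of an appeal.

    p_winner: estimated probability that the previous round winner
              ultimately wins the dispute.
    m_w, m_l: winner and loser stake multipliers.
    A:        arbitration fees for the appeal round.
    """
    # Winner side gains the loser's stake (m_l * A) or loses (1 + m_w) * A.
    winner_side = p_winner * m_l * A - (1 - p_winner) * (1 + m_w) * A
    # Loser side gains the winner's stake (m_w * A) or loses (1 + m_l) * A.
    loser_side = (1 - p_winner) * m_w * A - p_winner * (1 + m_l) * A
    return winner_side, loser_side


# A 50-50 toss-up with equal multipliers: both sides expect to lose money.
print(expected_returns(0.5, m_w=1.0, m_l=1.0))

# Whatever the multipliers, the two expected values sum to -A,
# the amount that goes to the jurors.
for m_w, m_l in [(0.5, 1.0), (1.0, 2.0), (2.0, 2.0)]:
    ev_w, ev_l = expected_returns(0.5, m_w, m_l)
    print(m_w, m_l, ev_w, ev_l, ev_w + ev_l)
```

Because the two expected values always sum to a negative number, at most one side can look attractive to a funder who shares a given estimate of the odds.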

If the stake multipliers are very large relative to A, then the amounts taken out of the game between crowdfunders on opposite sides become negligible and this game becomes approximately zero-sum. This means that more and more borderline cases would be funded by (sufficiently liquid) rational actors.

On the other hand, if the stake multipliers are very large, then that means that the total amounts required to appeal become large. So, even though the corresponding payout for funding a successful appeal also becomes large, it might become more difficult to find enough funders willing to participate.

Hence, the size of the stake multipliers involves a trade-off between two different types of friction in the appeal system: 1) having large enough payouts for funders for crowdfunding to be worthwhile and 2) not having total appeal costs so large that they become a barrier.

We have generally estimated that stake multipliers between 0.5 and 2 seem reasonable. Then there is also the question of how much bigger $m_l$ should be compared to $m_w$. For example, if $m_w = 1$ and $m_l = 2$, using the expected value formulas above, one sees that funders who think that the previous round winner has at least a 50% chance of eventually winning are incentivized to fund her side; this gives a solid amount of weight to the previous round decision. On the other hand, with these choices one would need to estimate that the previous round loser has at least a 75% chance of eventually winning in order to fund him. For another example, if $m_w = 0.5$ and $m_l = 1$, one would fund a previous round winner with at least a 60% chance of eventually winning and a previous round loser with at least an 80% chance of eventually winning. These thresholds might be considered acceptable in some contexts, and by choosing smaller values of $m_w$ and $m_l$, the total amounts required to appeal decrease.
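The thresholds above can be checked with a few lines of Python. This is a sketch under the same payoff assumptions as the rest of this section (a funder on the side that wins gains the other side's stake, and a funder on the side that loses forfeits fees plus stake); the function names are illustrative.

```python
def break_even_probabilities(m_w: float, m_l: float):
    """Minimum estimated win probability at which funding each side has a
    non-negative expected return.

    Obtained by setting each expected return to zero:
      winner side: p * m_l = (1 - p) * (1 + m_w)
      loser side:  q * m_w = (1 - q) * (1 + m_l)
    """
    total = 1 + m_w + m_l
    return (1 + m_w) / total, (1 + m_l) / total


def appeal_totals(m_w: float, m_l: float, A: float = 1.0):
    """Total amount each side must raise (fees plus stake) to fund an appeal."""
    return (1 + m_w) * A, (1 + m_l) * A


print(break_even_probabilities(1.0, 2.0))  # (0.5, 0.75)
print(appeal_totals(1.0, 2.0))             # (2.0, 3.0)
```

Trying other multiplier pairs shows the trade-off directly: lower multipliers reduce the totals each side must raise, while the gap between the two multipliers sets how much weight the previous ruling carries.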

Finally, there is also the "shared stake multiplier" $m_s$; this is similar to the other two, but it is used when the previous round is a tie. It might be reasonable to take $m_s$ equal to $m_w$, or perhaps equal to the average of $m_w$ and $m_l$.

Ultimately, your goal in choosing stake multipliers is to balance the different types of friction in the appeal process so that people will be incentivized to appeal incorrect decisions.

Choice of Governor

Of course, for all of the choices that we discussed above, situations can evolve and it might be necessary to update the parameters of your list. The ability to make such parameter updates lies with the "Governor" of the list. When setting up a new TCR, you can set the Governor of your list to be the Kleros governor. Then prospective users of your list will know that it is genuinely decentralized while there is nevertheless a mechanism in place to make the updates that it will need.

On the other hand, you can also set an address you control to be the Governor of your new TCR. Then you will have more flexibility to make updates yourself, at the cost that would-be users of your list might be more skeptical that it will remain useful should you become hostile or inactive. Nevertheless, if you set the Governor of the TCR to be an address you control, you can later transfer this role to the Kleros governor.

Badges

After having made all of these choices, you also need to set parameters for the Badges associated with your list. These control which other lists are seen in the interface as being connected to your list, allowing for interoperability between your list and others. If this functionality is not relevant to the goals of your list, you might set a Primary Document for the Badge that just says "Reject all badges".

However, if you have a more nuanced policy on allowing badges, then considering the structural role the Badge List plays in how your list operates, you probably want to have deposit amounts that are much higher than those to just submit elements to your list. Generally, all of the considerations and tradeoffs discussed above need to be repeated when setting the parameters for the Badge List.

Submitting to the List Registry

After you create your list, you may want to give it more visibility. Lists which are featured in the Kleros Curate interface are themselves determined by a global TCR. If you want your new list to be visible on curate.kleros.io, you will need to make sure that it meets the requirements of this global registry. Disputes about whether a new list meets these requirements or not are also resolved by Kleros jurors.

Hence, before submitting, make sure you read the policies of Curate to minimize the risk that your list is rejected and you lose your deposit.

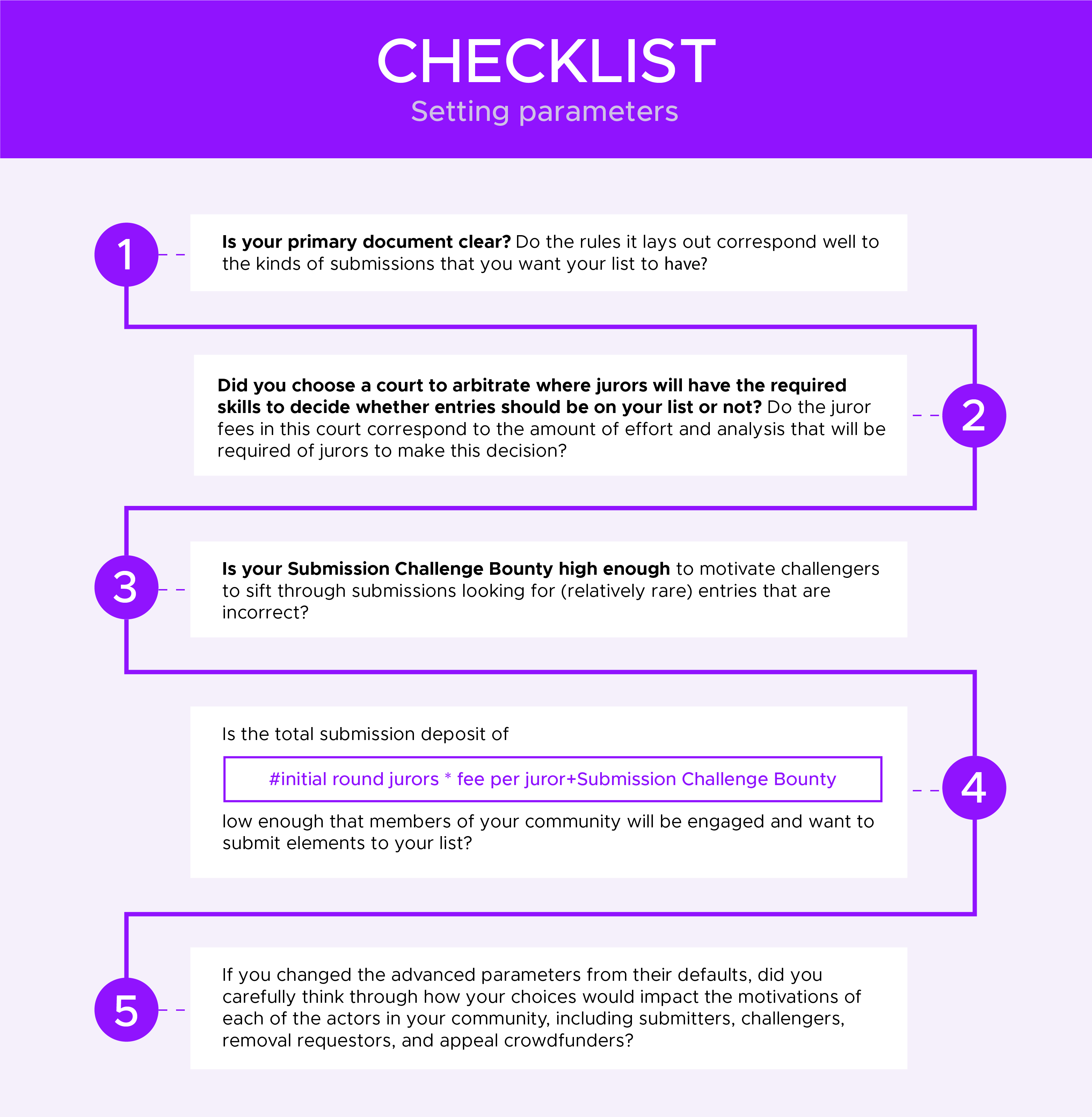

Conclusion

When setting up a new list, there are a number of subtle trade-offs that you can make in how you tune your parameter choices. When making many of these choices, you should ask yourself how rigorous you need your list to be. Namely, to what degree are you willing to set safeguards against incorrect submissions that also decrease the number of legitimate submissions to your list?

On several of the choices above, we have described mathematical models that can help guide you in your analysis. However, you should also try to keep in mind the behavior of the actual community and what levels of deposits and potential returns you think will be sufficient to encourage them to participate as submitters, challengers, or crowdfunders. Ultimately, your list will only be useful if you manage to make parameter choices that encourage active participation from your community.

We understand that some of the tradeoffs that need to be made when setting up a list are subtle. If you have doubts about your choices, you can contact us for advice via Slack or in our dedicated Telegram for Curate Support.
